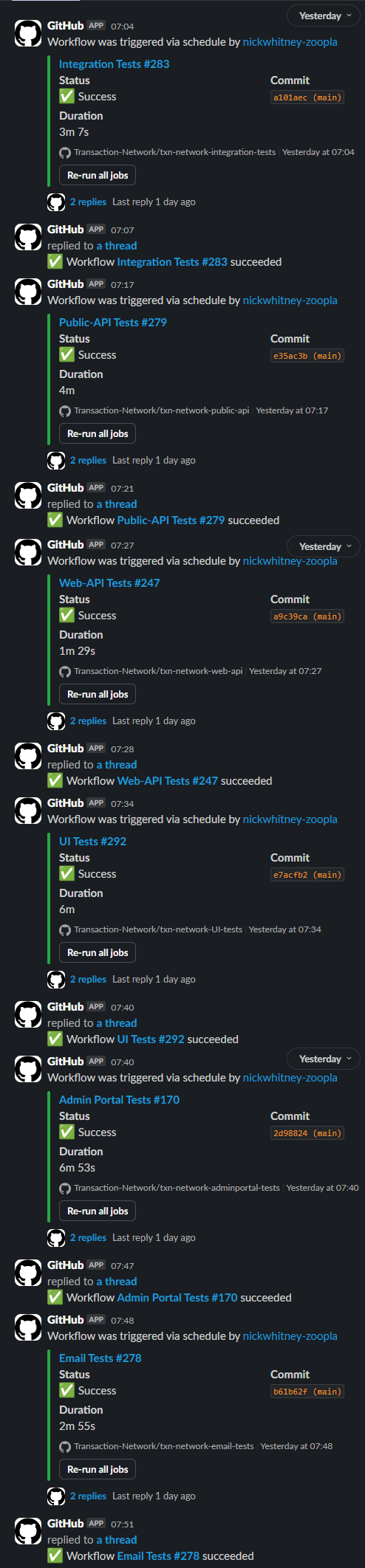

Public API

Next up, our Public API. Every endpoint was tested against each possible response (Success, Bad Request, Not Found, Unauthorised and so on, as applicable), again in C# with RestSharp and xUnit. The log file clearly listed each endpoint with its expected and actual responses, and failures were repeated in a summary section, which made problems quick to identify. To support this, a public stream writer variable was created in the xUnit fixture and made available to all [Fact]s.

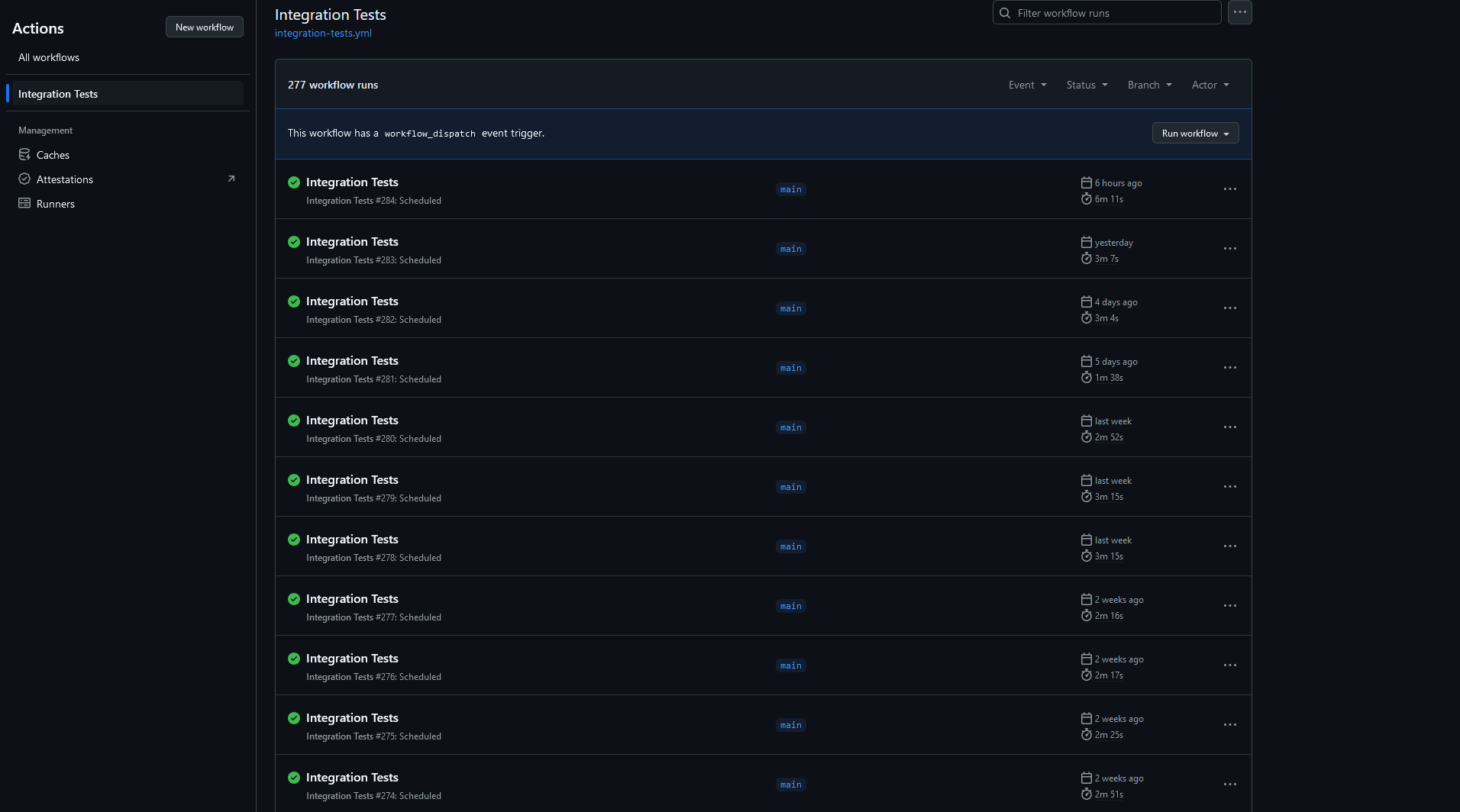

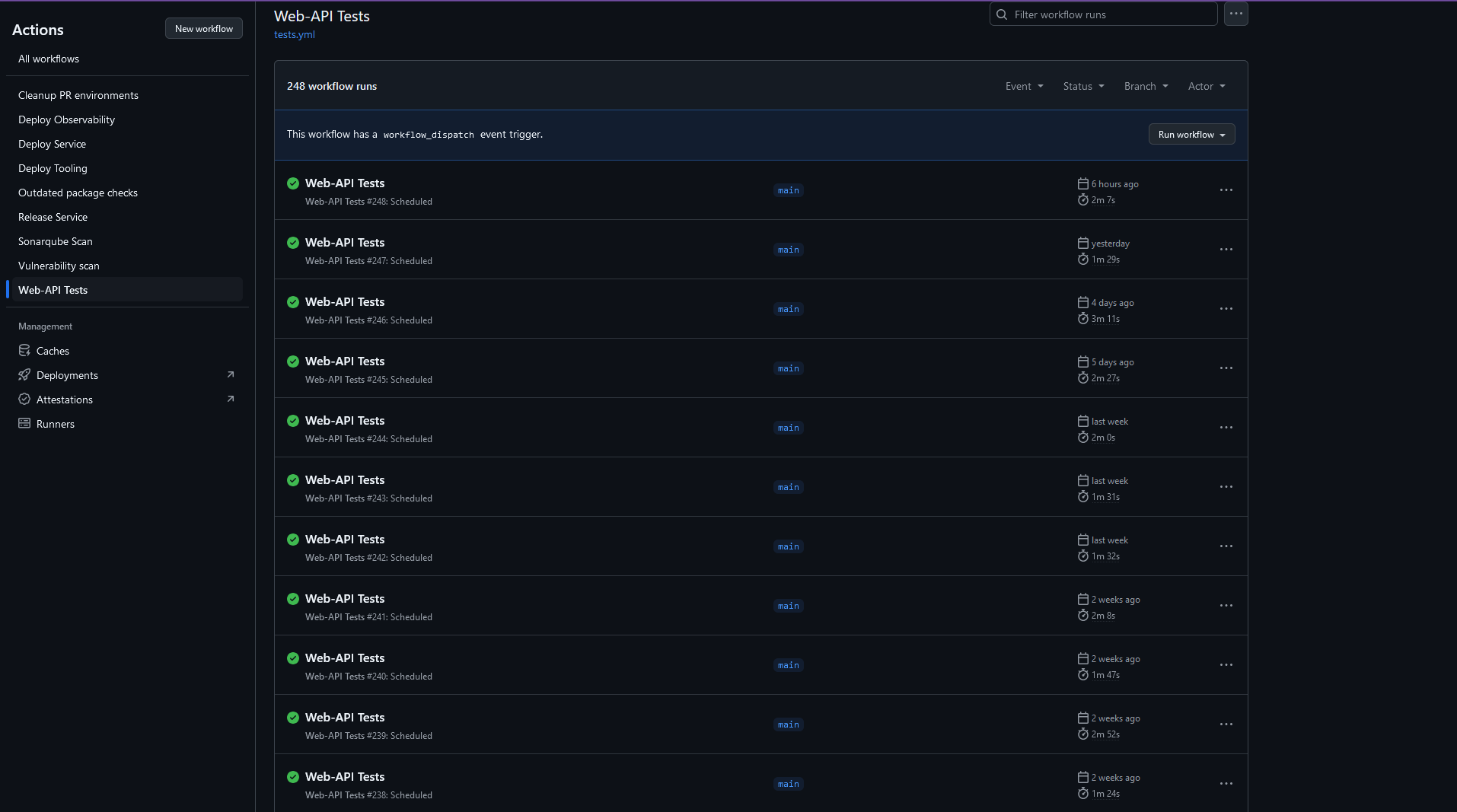
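The log layout described above (one line per check, then a summary that repeats the failures) can be sketched roughly as follows. This is a minimal illustration in TypeScript rather than the C# used for the real suite; the `EndpointResult` shape, the `formatLog` name and the line format are all assumptions made for the example.

```typescript
// Hypothetical shape of one logged check; the field names are illustrative only.
interface EndpointResult {
  endpoint: string; // e.g. "GET /orders/{id}" (made-up endpoint)
  expected: number; // expected HTTP status code
  actual: number;   // status code actually returned
}

// Build the log text: one line per check, followed by a summary
// section that repeats every failing line.
function formatLog(results: EndpointResult[]): string {
  const lines = results.map((r) => {
    const verdict = r.expected === r.actual ? "PASS" : "FAIL";
    return `${verdict} ${r.endpoint} expected=${r.expected} actual=${r.actual}`;
  });
  const failures = lines.filter((line) => line.startsWith("FAIL"));
  return [
    ...lines,
    "",
    `SUMMARY: ${failures.length} failure(s) of ${results.length} check(s)`,
    ...failures,
  ].join("\n");
}
```

Repeating the failures at the end means nobody has to scroll through every passing check to find the one that broke.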

Web API

Next the Web API, whose purpose is to serve our UI.

Same approach, coverage and implementation as the Public API.

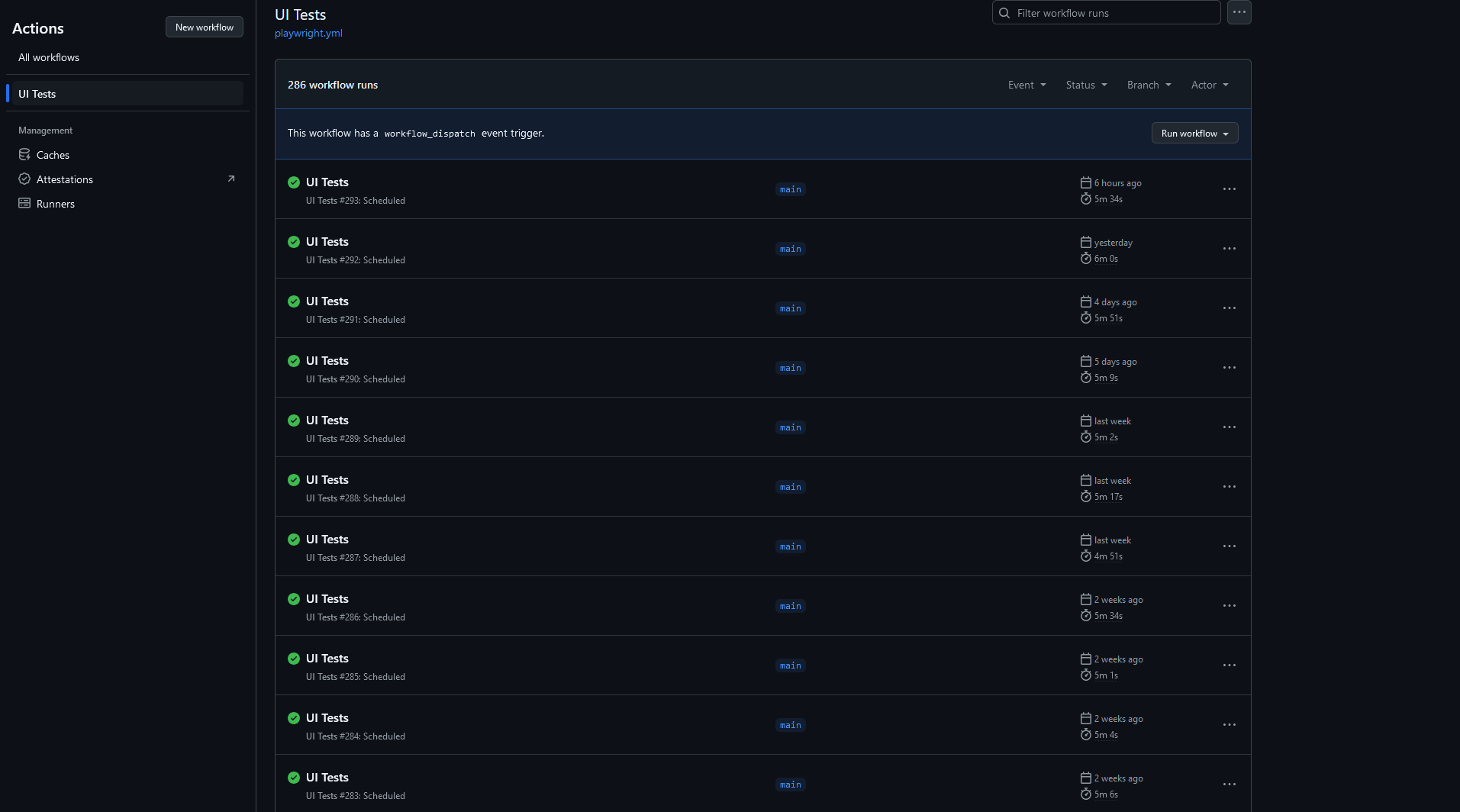

Web UI

Then UI automation, implemented in Playwright with TypeScript. Our company was using Playwright elsewhere and I had heard good things about it. Multiple scenarios were covered, including their alternative options, each verifying the expected UI elements.

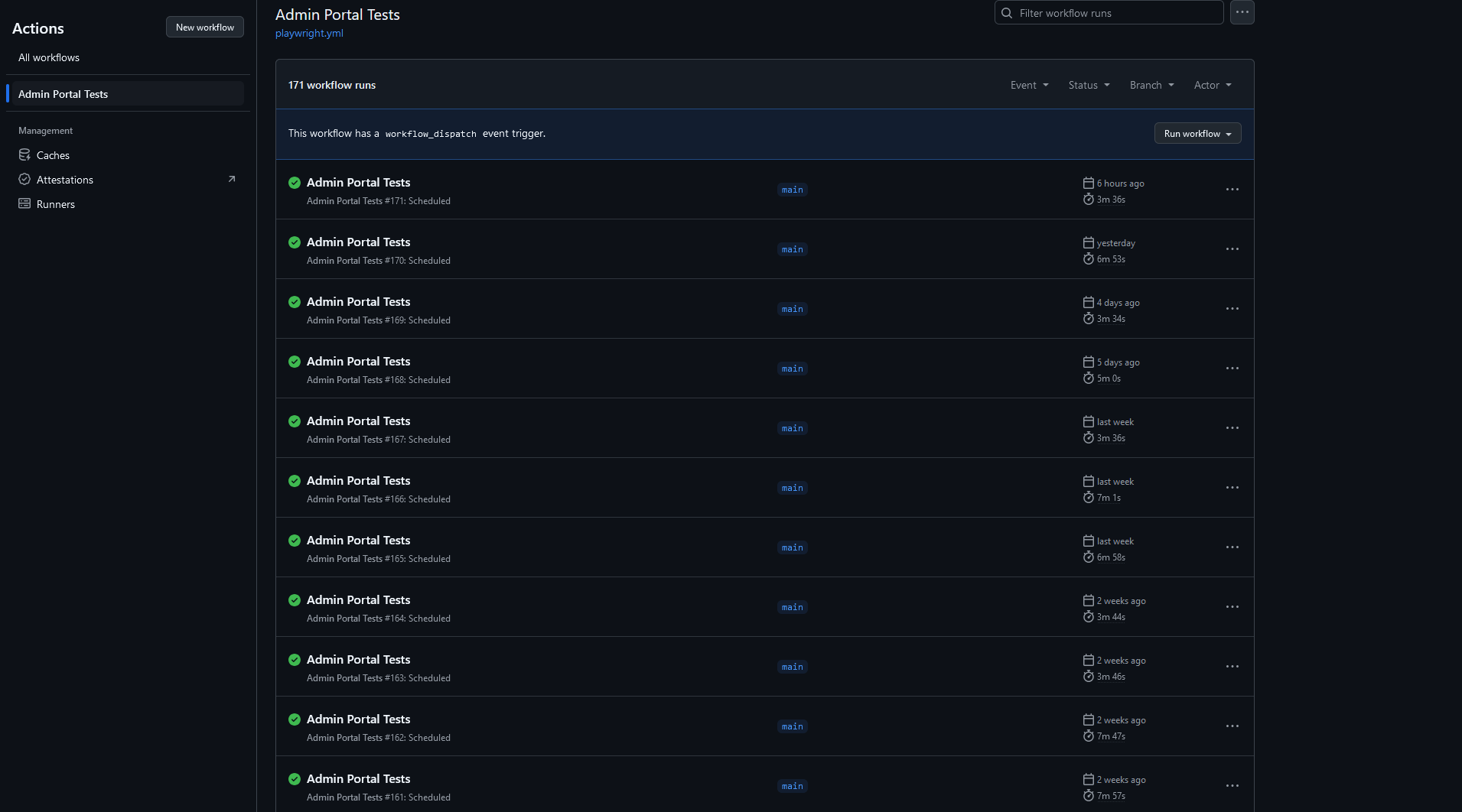

Admin portal

Our admin portal was also tested with Playwright. Every page visited and verified for elements and data as appropriate.

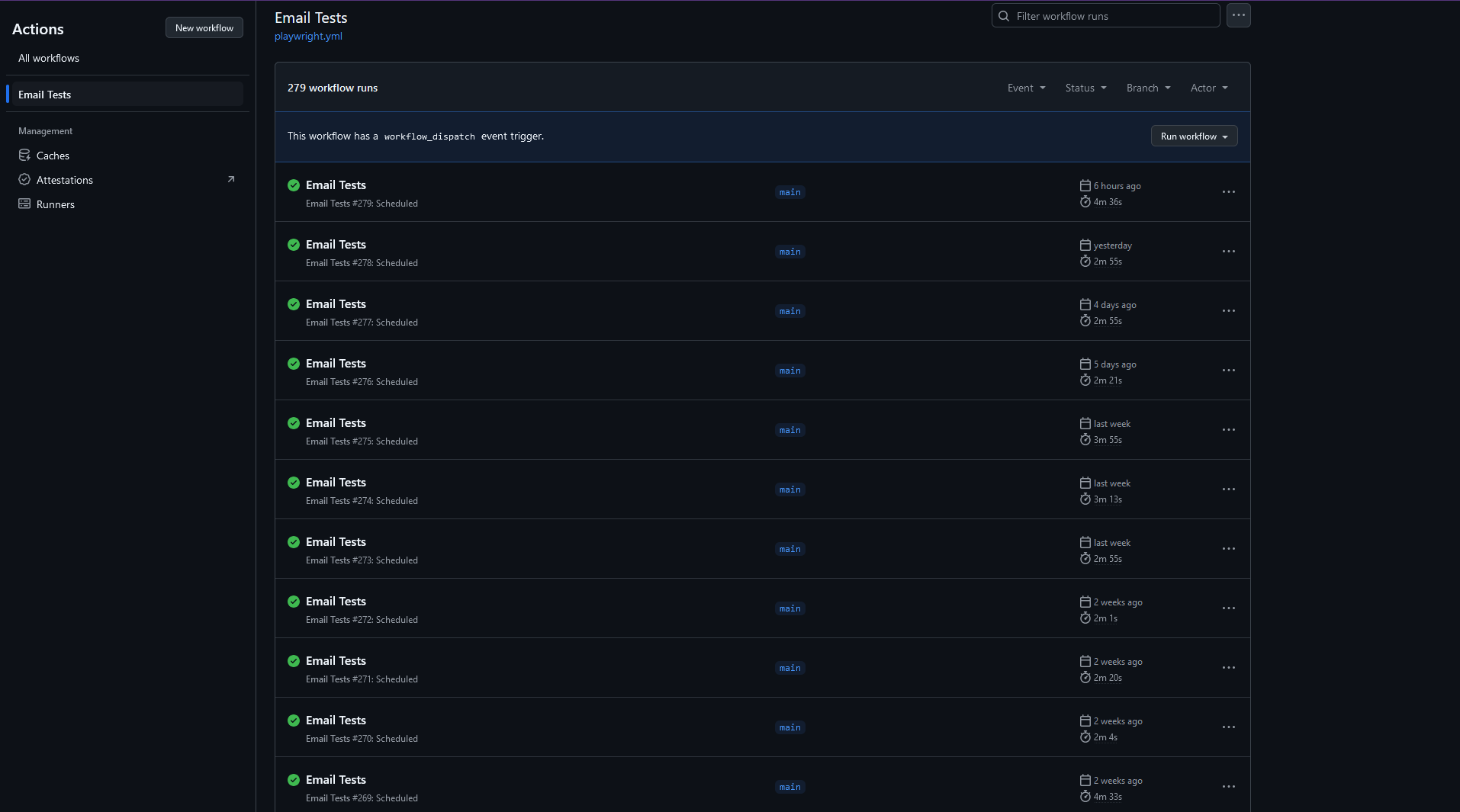

Email tests

Emails were sent to Mailinator and retrieved via API. The HTML was then rendered in a browser window and the screenshot matched using Playwright's pixelmatch library. This had the added advantage of testing alignment, font and image changes. An idiosyncrasy was that the image generated on GitHub would sometimes differ from the one produced locally (mainly due to font rendering), so I took the screenshot from the failed run and uploaded it as the expected result, which worked well.
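To give a feel for what a pixel comparison does, here is a deliberately simplified sketch. It is not pixelmatch itself (the real library uses a perceptual colour metric and anti-aliasing detection); it just counts pixels that differ between two raw RGBA buffers and compares the ratio against a limit. The function names, the `tolerance` parameter and the `maxDiffRatio` default are assumptions for illustration.

```typescript
// Count pixels where any RGBA channel differs by more than `tolerance`.
// Both buffers are assumed to hold raw RGBA bytes for same-sized images.
function countDifferentPixels(a: Uint8Array, b: Uint8Array, tolerance = 0): number {
  if (a.length !== b.length || a.length % 4 !== 0) {
    throw new Error("Buffers must be equal-length RGBA data");
  }
  let different = 0;
  for (let i = 0; i < a.length; i += 4) {
    for (let channel = 0; channel < 4; channel++) {
      if (Math.abs(a[i + channel] - b[i + channel]) > tolerance) {
        different++;
        break; // count each pixel at most once
      }
    }
  }
  return different;
}

// Treat two images as matching when the share of differing pixels
// stays at or below `maxDiffRatio` (hypothetical default of 1%).
function imagesMatch(
  a: Uint8Array,
  b: Uint8Array,
  maxDiffRatio = 0.01,
  tolerance = 0,
): boolean {
  const totalPixels = a.length / 4;
  if (totalPixels === 0) return a.length === b.length; // two empty images match
  return countDifferentPixels(a, b, tolerance) / totalPixels <= maxDiffRatio;
}
```

A small tolerance or diff ratio is one way to absorb minor rendering differences between machines, although replacing the expected image with the one from the CI run, as described above, avoids having to loosen the comparison at all.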

Anything else?

- A test for integrator response duration. Results were logged to file with timestamps and metrics encapsulated in [], which allowed a reliable regex to extract the values. I then wrote a PowerShell tool that used the GitHub API to read artifacts, download selected logs, extract the metrics and export them to CSV.

- An "API tool" in PowerShell that provides a Windows GUI for all functions of our API. Testing is then much quicker than editing values in Postman JSON payloads.

- Load testing an application with Playwright and Artillery running on AWS Fargate.

- A bespoke webhook analyser which verified the requests and their payloads, then posted the results to Slack.
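As an illustration of why wrapping the timestamps and metrics in [] makes extraction reliable, here is a minimal sketch of the extract-and-export step from the response duration item above. The real tool was written in PowerShell and also handled the GitHub artifact download; this TypeScript version covers only the regex and CSV part. The log line format, the `duration_ms` metric name and the function names are all assumptions.

```typescript
interface Metric {
  timestamp: string;
  name: string;
  value: number;
}

// Assumed line shape (hypothetical):
// "[2024-05-01T10:15:30Z] Integrator call finished [duration_ms=812]"
// The brackets give the regex unambiguous start and end anchors.
const LINE_PATTERN = /^\[([^\]]+)\].*\[(\w+)=(\d+(?:\.\d+)?)\]\s*$/;

// Pull a metric out of every line that matches; other lines are ignored.
function extractMetrics(log: string): Metric[] {
  const metrics: Metric[] = [];
  for (const line of log.split(/\r?\n/)) {
    const match = LINE_PATTERN.exec(line);
    if (match) {
      metrics.push({
        timestamp: match[1],
        name: match[2],
        value: Number(match[3]),
      });
    }
  }
  return metrics;
}

// Render the metrics as CSV text with a header row.
function toCsv(metrics: Metric[]): string {
  const rows = metrics.map((m) => `${m.timestamp},${m.name},${m.value}`);
  return ["timestamp,name,value", ...rows].join("\n");
}
```

Because free-text log messages rarely contain a bracketed `name=value` pair by accident, the pattern can skip everything else in the file without extra filtering.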